CASE STUDY

Data Pipeline to Support ML Services Deployed on Google Cloud Platform

The client is a higher education institution that is more than 50 years old. It offers undergraduate, graduate, and doctoral degrees in multiple streams, such as business management. An overwhelming majority of its students use the website and enroll in online courses.

The Opportunity

The client wanted to set up a cloud-based solution to collect user-level data, store it in a data warehouse, and build pipelines to connect it with an ML model. The ever-increasing number of website users called for highly scalable, fault-tolerant data pipelines and connectors.

The Approach

Google Cloud Platform as the core infrastructure

Google Cloud Platform offers services that can be combined into a unified, auto-scaling platform for production ETL pipelines, ad hoc analytics, and machine learning. Google Cloud compute services were used for scheduled offline analysis, and serverless computing was used to launch the real-time connectors.

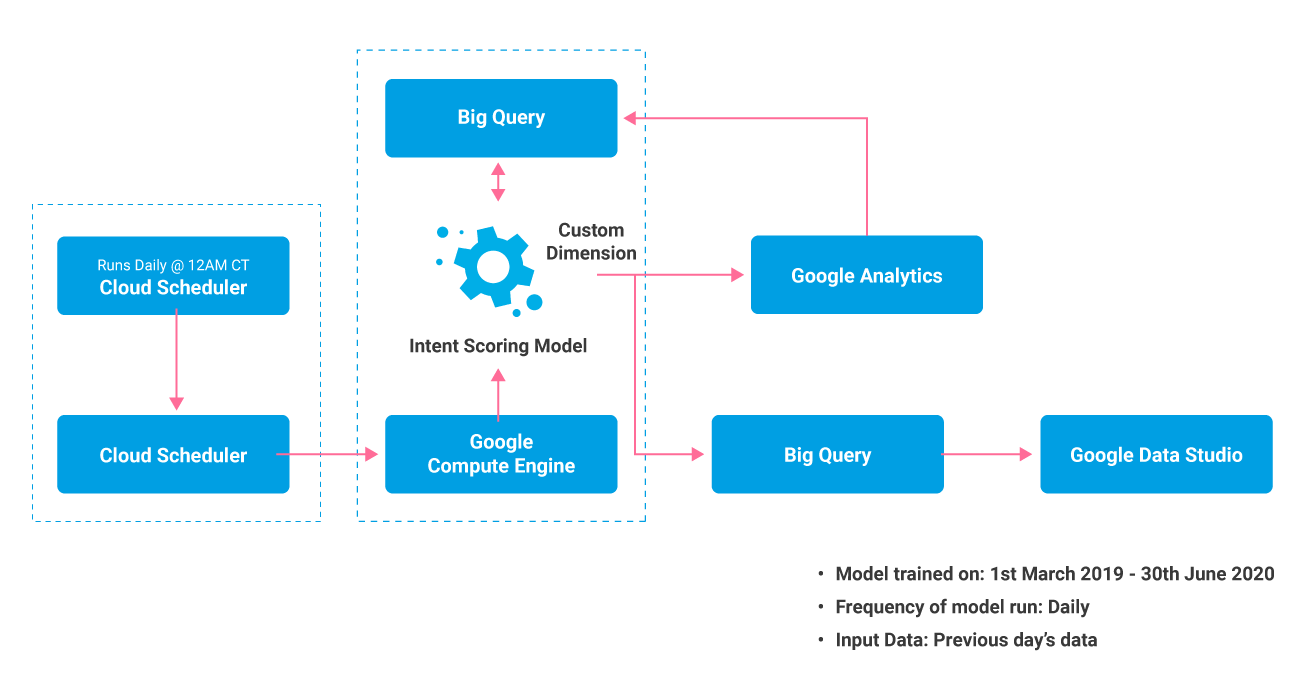

Data Pipelining

Initially, the connector script pulled historical data for the previous year, and this data was used to train the model. The trained model was stored as pickle files in GCP. After that, the connector fetched the data daily, cleansed it, and stored it in BigQuery. ML models running inside Cloud Run fetched the data from BigQuery and scored it. The scoring output was uploaded to Google Analytics (GA) and stored in BigQuery as columnar data. The whole process and all of its components were designed and deployed using Python.
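The daily fetch, cleanse, and score steps can be sketched in plain Python. This is only an illustration under stated assumptions: the feature names (`pageviews`, `sessions`), the logistic scoring formula, the weights, and the function names `cleanse`, `score`, and `run_daily` are all hypothetical, and the BigQuery and GA calls are left out so the sketch stays self-contained.

```python
import math
import pickle

# Hypothetical model artifact: a plain dict of weights, pickled to mirror
# the pickle files mentioned in the case study. The values are made up.
MODEL_BYTES = pickle.dumps(
    {"bias": -2.0, "weights": {"pageviews": 0.3, "sessions": 0.5}}
)


def cleanse(rows):
    """Drop rows without a user id and coerce numeric fields (missing -> 0)."""
    clean = []
    for row in rows:
        if not row.get("user_id"):
            continue  # cannot attribute a score without a user id
        clean.append({
            "user_id": str(row["user_id"]),
            "pageviews": float(row.get("pageviews") or 0),
            "sessions": float(row.get("sessions") or 0),
        })
    return clean


def score(model, row):
    """Logistic score in (0, 1); an assumed stand-in for the real model."""
    z = model["bias"] + sum(w * row[name] for name, w in model["weights"].items())
    return 1.0 / (1.0 + math.exp(-z))


def run_daily(raw_rows, model_bytes=MODEL_BYTES):
    """Load the pickled model, cleanse the day's rows, and score each one."""
    model = pickle.loads(model_bytes)
    return [
        {"user_id": row["user_id"], "intent_score": round(score(model, row), 4)}
        for row in cleanse(raw_rows)
    ]
```

In a real deployment, `raw_rows` would come from the connector and the returned rows would be written to the warehouse. Those integration points are deliberately omitted here.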

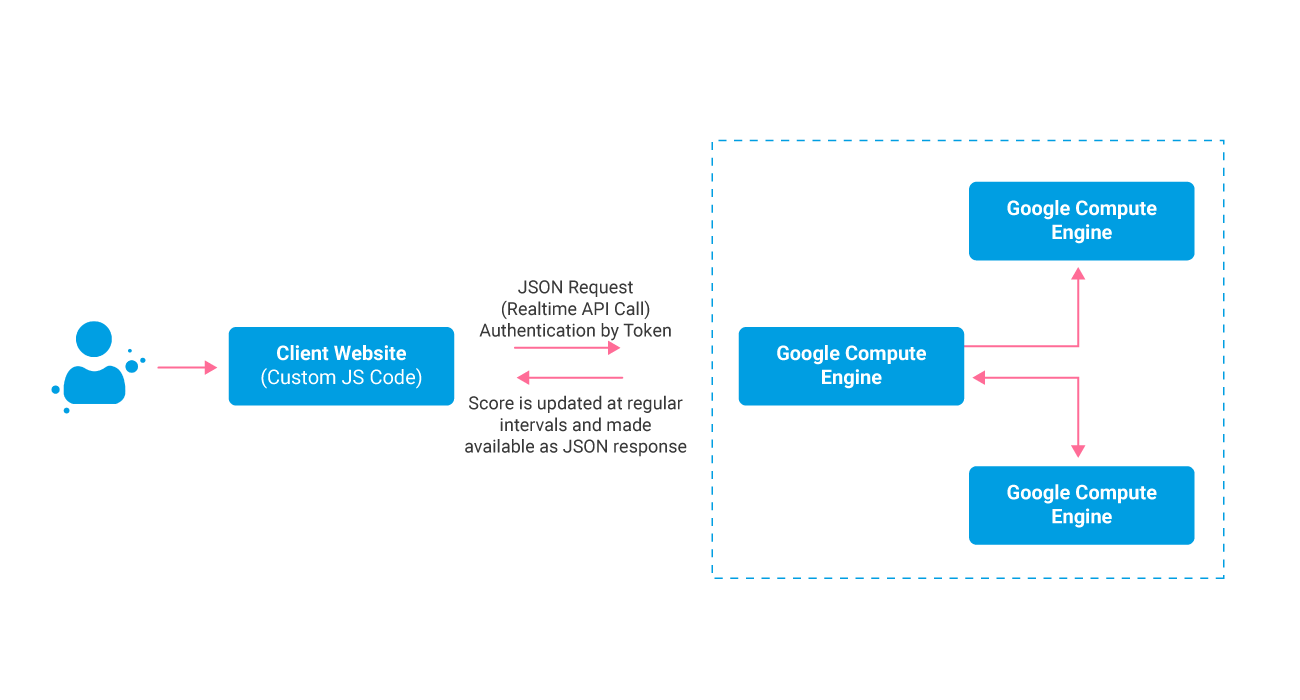

ML Implementation

The ML algorithm was released as an endpoint in GCP that could be called by authorized online tags. It was integrated with GA for audience creation and reporting, and with Data Studio for model performance monitoring dashboards.

The Solution

Deployment 1 – Offline Model:

A Python-based connector hosted in the cloud runs daily. It retrieves the latest clickstream data from Google Analytics / BigQuery, provides it as input to the ML model to calculate an intent score, and uploads the intent score as a custom dimension back into GA and BigQuery. The offline model uses several Google Cloud Platform services: Cloud Functions, Cloud Scheduler, Google Compute Engine, and BigQuery.

Deployment 2 – Online real time model:

A Python-based real-time connector hosted in the cloud acts as a scalable connector that calculates the intent score while the user is on the website.
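A real-time scoring connector of this kind could look roughly like the following WSGI application, which a containerized HTTP server might serve. This is a minimal sketch, not the deployed implementation: the request fields, the weights in `MODEL`, and the names `score_payload` and `app` are assumptions made for illustration.

```python
import json
import math

# Hypothetical weights; the real service would load its trained model instead.
MODEL = {"bias": -2.0, "weights": {"pageviews": 0.3, "sessions": 0.5}}


def score_payload(body: bytes):
    """Return (http_status, response_dict) for one JSON scoring request."""
    try:
        payload = json.loads(body)
        features = {name: float(payload[name]) for name in MODEL["weights"]}
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing fields, or non-numeric values
        return 400, {"error": "expected JSON with numeric pageviews and sessions"}
    z = MODEL["bias"] + sum(w * features[n] for n, w in MODEL["weights"].items())
    return 200, {"intent_score": round(1.0 / (1.0 + math.exp(-z)), 4)}


def app(environ, start_response):
    """WSGI entry point: read the request body, score it, return JSON."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    status, result = score_payload(environ["wsgi.input"].read(length))
    data = json.dumps(result).encode("utf-8")
    reason = "OK" if status == 200 else "Bad Request"
    start_response(
        f"{status} {reason}",
        [("Content-Type", "application/json"), ("Content-Length", str(len(data)))],
    )
    return [data]
```

Keeping the scoring logic in `score_payload`, separate from the HTTP wrapper, makes it possible to test the scoring without starting a server.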

Auto Refresh:

The GCP data pipeline is a managed ETL service that launches a virtual machine at a scheduled time each day to run the Python-based connector. The connector pulls data from the GA API, processes it, uses it as input to the ML model, and pushes the output to BigQuery and GA. The pipeline alerts admins by email if any failure or error occurs during the ETL run.
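The failure-alert behavior can be illustrated with a small wrapper around the scheduled job. This is a sketch under assumptions: `run_with_alert`, the `job_name` label, and the `notify(subject, body)` callback are hypothetical names, and the actual email delivery is left to whatever the callback does.

```python
import traceback


def run_with_alert(job, notify, job_name="daily-etl"):
    """Run job(); on any exception, call notify(subject, body) and return False."""
    try:
        job()
    except Exception as exc:
        # Include the exception type in the subject and the full traceback
        # in the body so admins can triage from the message alone.
        subject = f"[{job_name}] pipeline failure: {type(exc).__name__}"
        notify(subject, traceback.format_exc())
        return False
    return True
```

In practice, `notify` might wrap an email client such as the standard library's `smtplib`, or a managed alerting channel. The callback keeps the wrapper independent of that choice.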