CASE STUDY

Building a multi-channel funnel view for marketing attribution

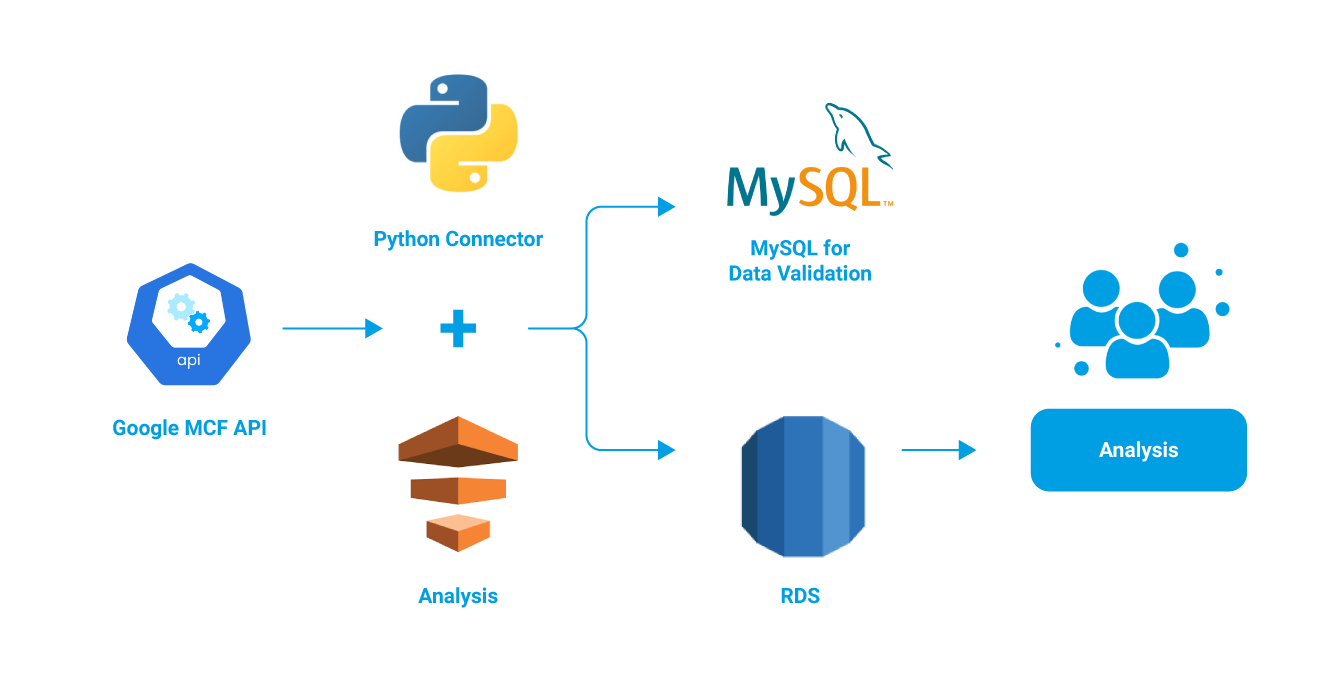

The client (a 20+ years old US based home décor business) depends heavily on digital marketing to generate traffic and demand on its e-commerce website. Thus, its paramount for the client to understand what kind of channel pathing is being most efficient in generating quality traffic and revenue lift. To be able to do such an analysis, the client was dependent heavily on Google’s MCF data, and its availability along with other marketing data to make analysis and dashboards comprehensive. Thus, there was an opportunity for the client to build a connector and get the Google’s MCF data as part of its marketing data warehouse in AWS RDS. The connector was coded in Python and it was hosted in AWS EC2. Inside the code, connector pulls the data using API that remits data in JSON format.

The Challenge

Google’s MCF Data extraction using an API had a few challenges. The data couldn’t be extracted for large date ranges, as there was a ceiling on the volume of data that could be extracted, and such a large data output was often sampled, and not the complete one. Thus, the connector had to be designed to pre-assess the possible volume of the data extract request, and then meter it accordingly. The connector also had to validate the output against a total expected record count from the available system of record, and if any discrepancy, self-correct and reattempt. This iterative data-pull required connector to be trained and optimized for different scenario handling. The connector was coded in Python and it was hosted in AWS EC2. Inside the code, connector pulls the data using API that remits data in JSON format.

Connector Build and Deploy

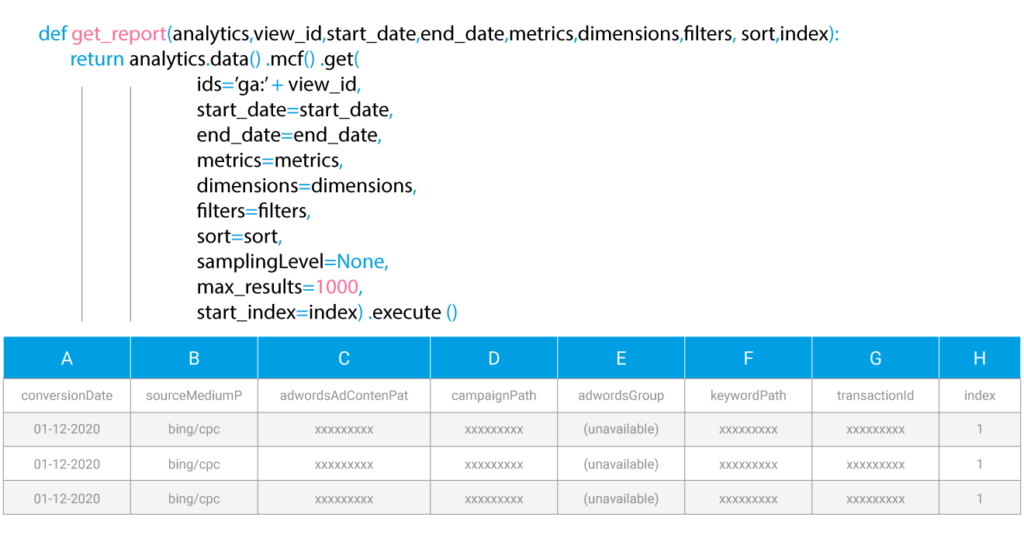

The connector was coded in Python and it was hosted in AWS Data Pipeline. Inside the code, connector pulls the data using API that remits data in JSON format. The connector then converts the json into readable tabular format and splits the nested data into multiple rows and assigns index. The data validation within the connector happens in two steps using MYSQL as temporaray database for validation and any root cause analysis. Once validated, connector inserts data into an RDS table.

The Solution

One-time historical pull

Initially the connector script ran to pull historical data for the last 3 years, processed the data, and pushed to RDS.

API sampling problem handling

Google MCF API samples the data if we request the data for large date ranges. So, the connector was programmed such way that even if the user requested large date range, internally script breaks down date range to individual dates and pull data against each day, and this approach reduces the sampling data from API.

Auto Refresh

AWS data pipeline is a managed ETL service that can launch a virtual machine at a daily scheduled time to run the python-based connector to pull the data from MCF API and process it and push the transformed data to an RDS table. It has an inbuilt mechanism to alert the user using SNS notification if there is any failure in pipeline or any error occurred during the ETL run.